Ólafur Páll Geirsson

In September, we posted a “Roadmap towards non-experimental macros”. It’s been two months since then and I’d like to share an update on what’s been happening.

A diverse ecosystem

When starting this project, we wanted to first get an idea of how macros are used in the community. Not surprisingly, we discovered that macros are used in a variety of ways. Here are some popular use-cases we found:

- code generation: to avoid writing boilerplate by hand, which is both error-prone and cumbersome. This is used by Play JSON and Spark.

- embedded DSLs: to attach new semantics to existing language syntax. This includes a wide range of applications from database query libraries like Quill, to hardware construction frameworks like Chisel and build tools like sbt.

- optimizations: to avoid expensive runtime allocations and method calls. This includes libraries like spire and log4s.

- developer ergonomics: to surface source context information like variable names, file names and line numbers. This includes libraries like ScalaTest and sourcecode.

- improved type-safety: to increase the static guarantees of your programs beyond what the Scala language can provide. This includes libraries like Refined.

The current scala-reflect macro system has in fact done a phenomenal job at accommodating these diverse use-cases under a unified API. The Scala ecosystem is rich as it is thanks to this wide range of applications that are enabled by scala-reflect macros.

To get a better feel for how common each use-case is, we performed an analysis on a corpus of 20,906 source files containing ~2 million lines of code derived from the Scala compiler community build. The corpus includes sources for a diverse set of libraries such as Akka, Cats, Scalaz, Monocle, twitter/util, Spire, Scrooge, Blaze, Algebra, fastparse, Scanamo as well as applications like ornicar/lila (https://lichess.org) and guardian/frontend (https://www.theguardian.com/). Our analysis tracks the number of call-sites to each def macro and groups them by whether the call-site comes from test or main sources. Here are the top results:

Main sources

442: org.scalactic.source.Position.here()Lorg/scalactic/source/Position;.

345: org.parboiled2.Parser#rule(Lorg/parboiled2/Rule;)Lorg/parboiled2/Rule;.

287: akka.parboiled2.Parser#rule(Lakka/parboiled2/Rule;)Lakka/parboiled2/Rule;.

242: spire.syntax.CforSyntax#cfor(Ljava/lang/Object;Lscala/Function1;Lscala/Function1;Lscala/Function1;)V.

173: spire.syntax.EqOps#`===`(Ljava/lang/Object;Lscala/Predef/$eq$colon$eq;)Z.

170: slick.util.MacroSupportInterpolation#b(Lscala/collection/Seq;)V.

156: reactivemongo.bson.Macros.handler()Lreactivemongo/bson/BSONDocumentReader;.

149: spire.syntax.CforSyntax#cforRange(Lscala/collection/immutable/Range;Lscala/Function1;)V.

141: spire.syntax.MultiplicativeSemigroupOps#`*`(Ljava/lang/Object;)Ljava/lang/Object;.

128: spire.syntax.AdditiveSemigroupOps#`+`(Ljava/lang/Object;)Ljava/lang/Object;.

123: scalaxy.debug.package.require(ZLjava/lang/String;)V.

108: play.api.libs.json.Json.format()Lplay/api/libs/json/OFormat;.

103: scala.StringContext#f(Lscala/collection/Seq;)Ljava/lang/String;.

102: play.api.libs.json.Json.writes()Lplay/api/libs/json/Writes;.

97: scala.reflect.api.Universe#reify(Ljava/lang/Object;)Lscala/reflect/api/Exprs/Expr;.

71: play.api.libs.json.Json.reads()Lplay/api/libs/json/Reads;.

56: spire.syntax.MultiplicativeGroupOps#`/`(Ljava/lang/Object;)Ljava/lang/Object;.

49: spire.syntax.AdditiveGroupOps#`unary_-`()Ljava/lang/Object;.

46: org.log4s.Logger#error(Ljava/lang/Throwable;Ljava/lang/String;)V.

41: spire.syntax.AdditiveGroupOps#`-`(Ljava/lang/Object;)Ljava/lang/Object;.

36: spire.syntax.OrderOps#compare(Ljava/lang/Object;)I.

31: com.typesafe.scalalogging.Logger#info(Ljava/lang/String;)V.

31: spire.syntax.PartialOrderOps#`<`(Ljava/lang/Object;)Z.

30: spire.syntax.PartialOrderOps#`<=`(Ljava/lang/Object;)Z.

30: scalaxy.debug.package.require(Z)V.

28: spire.syntax.ModuleOps#`*:`(Ljava/lang/Object;Lspire/algebra/Module;)Ljava/lang/Object;.

26: spire.syntax.SemiringOps#pow(I)Ljava/lang/Object;.

... truncated

Test sources

8969: org.scalatest.Assertions#assert(ZLorg/scalactic/Prettifier;Lorg/scalactic/source/Position;)Lorg/scalatest/compatible/Assertion;.

7070: org.scalactic.source.Position.here()Lorg/scalactic/source/Position;.

1263: minitest.api.Asserts#assertEquals(Ljava/lang/Object;Ljava/lang/Object;)V.

386: utest.asserts.Asserts#assert(Lscala/collection/Seq;)V.

271: minitest.api.Asserts#assert(Z)V.

270: org.scalatest.Assertions#assert(ZLjava/lang/Object;Lorg/scalactic/Prettifier;Lorg/scalactic/source/Position;)Lorg/scalatest/compatible/Assertion;.

265: org.specs2.specification.create.S2StringContextCreation#specificationInStringContext#s2(Lscala/collection/Seq;)Lorg/specs2/specification/core/Fragments;.

264: minitest.api.Asserts#assert(ZLjava/lang/String;)V.

217: scodec.bits.package.HexStringSyntax#hex(Lscala/collection/Seq;)Lscodec/bits/ByteVector;.

206: records.Rec.applyDynamic(Ljava/lang/String;Lscala/collection/Seq;)Lrecords/Rec;.

179: spire.syntax.EqOps#`===`(Ljava/lang/Object;Lscala/Predef/$eq$colon$eq;)Z.

143: org.parboiled2.Parser#rule(Lorg/parboiled2/Rule;)Lorg/parboiled2/Rule;.

135: spire.syntax.Literals#r()Lspire/math/Rational;.

115: scala.async.internal.AsyncId.async(Lscala/Function0;)Ljava/lang/Object;.

93: spire.syntax.Literals#b()B.

86: org.scalatest.Matchers#AnyShouldWrapper#shouldBe(Lorg/scalatest/words/ResultOfATypeInvocation;)Lorg/scalatest/compatible/Assertion;.

68: scala.async.Async.async(Lscala/Function0;Lscala/concurrent/ExecutionContext;)Lscala/concurrent/Future;.

65: slick.collection.heterogeneous.HList#apply(I)Ljava/lang/Object;.

55: scala.StringContext#f(Lscala/collection/Seq;)Ljava/lang/String;.

48: utest.asserts.Asserts#intercept(Lscala/runtime/BoxedUnit;Lscala/reflect/ClassTag;)Ljava/lang/Object;.

46: com.twitter.scalding.serialization.macros.LowerPriorityImplicit#primitiveOrderedBufferSupplier()Lcom/twitter/scalding/serialization/OrderedSerialization;.

43: minitest.api.Asserts#intercept(Lscala/Function0;)V.

43: org.scalatest.Matchers#StringShouldWrapper#shouldNot(Lorg/scalatest/words/CompileWord;Lorg/scalactic/source/Position;)Lorg/scalatest/compatible/Assertion;.

39: org.specs2.specification.create.AutoExamples#eg(Lscala/Function0;Lorg/specs2/execute/AsResult;)Lorg/specs2/specification/core/Fragments;.

37: records.Rec.fld(Lrecords/Rec;)Ljava/lang/Object;.

36: play.api.libs.json.Json.format()Lplay/api/libs/json/OFormat;.

33: spire.syntax.Literals#h()S.

... truncated

The complete list of results can be seen in this gist. Judging by the numbers, it seems that

- developer ergonomics macros such as assert and source.Position are heavily used in test sources, an order of magnitude more than any other category of macros. This is not surprising since large test suites contain many assert calls. Note that we excluded the ScalaTest sources from the corpus since they heavily biased the results by adding 140,000 call-sites to scalactic.source.Position.here().

- other popular uses of macros include optimization macros like those in spire/parboiled and code generation macros like Play JSON.

- there are not many usages of embedded DSLs in this corpus.

One caveat with this analysis is that the results are heavily biased by the choice of the corpus, which is predominantly open source libraries. Please get in touch with me if you would like to contribute more data to this corpus. My contact info is at the end of this post. We are especially interested in applications codebases rather than libraries, as well as projects making heavy use of macros for embedded DSLs.

If there is interest, I’m happy to write a guide on how to run the analysis on closed-source projects. It’s quite easy to do, and the results are anonymous excluding the signature names of the invoked def macros, which can be manually obfuscated. A large and representative corpus of “real-world” Scala codebases will help us continue to use a data-driven approach to prioritize the development of new macros.
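To make the shape of the tally concrete, here is a minimal sketch of the grouping step only. It assumes call-sites have already been extracted into simple records; the `CallSite` class, the `MacroTally` object and the `/src/test/` path convention are hypothetical and chosen for illustration, not taken from the actual analysis tool.

```scala
// Hypothetical record for one def macro call-site.
// The real analysis may use a different data model.
final case class CallSite(macroSignature: String, sourcePath: String)

object MacroTally {
  // Assumption: test sources live under a "/src/test/" directory,
  // as in the default sbt/Maven layout.
  def isTest(site: CallSite): Boolean =
    site.sourcePath.contains("/src/test/")

  // Count call-sites per macro signature, most frequent first.
  // Ties are broken alphabetically so the output is deterministic.
  def countBySignature(sites: Seq[CallSite]): Seq[(String, Int)] =
    sites
      .groupBy(_.macroSignature)
      .map { case (signature, group) => (signature, group.size) }
      .toSeq
      .sortBy { case (signature, count) => (-count, signature) }

  // Returns the (main, test) tallies as a pair.
  def tally(sites: Seq[CallSite]): (Seq[(String, Int)], Seq[(String, Int)]) = {
    val (testSites, mainSites) = sites.partition(isTest)
    (countBySignature(mainSites), countBySignature(testSites))
  }
}
```

Since only signatures and counts survive the grouping, obfuscating the signature names before sharing would be a simple string substitution on the first element of each pair.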

Tree transformations are tricky

Each of the diverse macro applications listed above requires a different set of features from the macro system. For example, code generation macros typically only require inspection of existing types and creation of new terms. Embedded DSLs on the other hand typically require inspection and transformation of existing terms created by the compiler. Optimization macros like those in log4s may even be avoided with improvements to alternative language features like inline.
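As a rough illustration of how inline could stand in for a logging macro, here is a sketch that assumes Scala 3, where `inline` is available on both methods and parameters. `SketchLogger` is a hypothetical class written for this example; it is not the log4s API.

```scala
// Hypothetical logger for illustration only; not the log4s API.
// Requires Scala 3 for `inline def` and `inline` parameters.
final class SketchLogger(val debugEnabled: Boolean) {
  private val buffer = new StringBuilder

  def write(msg: String): Unit = {
    buffer.append(msg).append('\n')
    ()
  }

  def output: String = buffer.toString

  // The method body and the argument expression are both expanded at
  // the call-site, so `msg` is only evaluated inside the `if` branch
  // and no closure is allocated to delay it.
  inline def debug(inline msg: String): Unit =
    if (debugEnabled) write(msg)
}
```

A call such as `logger.debug(expensiveMessage())` then expands to a guarded `write` at the call-site, which is the effect the optimization macros aim for.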

Some features of the macro system appear to be relatively simple to support across different compilers. In the scalacenter/macros repository, we have a prototype macro system that runs on both Scala 2.x and Dotty. In only a few days, we got some interesting macros working for both compilers: an automatic JSON serializer for case classes and most of the sourcecode macros. That is pretty exciting news for code generation macros and a subset of developer ergonomics macros. Inspection of types and creation of new terms doesn’t appear to introduce deep technical roadblocks! However, we struggled to implement some other macros like utest assert since it requires the ability to transform trees.

Macros that transform trees produced by the compiler are demanding on the macro system. When a term is transformed, the macro library or the macro system must ensure that internal invariants imposed by the compiler are respected. For example, the Scala compiler assumes that typed tree nodes cannot have untyped children. In scala-reflect, the burden is on the macro author to ensure this invariant holds. Mistakes from mixing untyped and typed tree nodes result in cryptic compiler crashes. In the new macro system, we want to avoid exposing macro authors and library users to such a bad experience.
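The invariant about typed and untyped nodes can be illustrated with a toy model. The `Tree`, `Lit` and `Plus` classes below are hypothetical and much simpler than the compiler's real trees; the sketch only shows what "no typed node has an untyped child" means as a check.

```scala
// Toy model only: these are NOT scalac's or scala-reflect's tree classes.
sealed trait Tree {
  // Some(type) once the node is typechecked, None while it is untyped.
  def tpe: Option[String]
  def children: List[Tree]
}

final case class Lit(value: Int, tpe: Option[String]) extends Tree {
  def children: List[Tree] = Nil
}

final case class Plus(lhs: Tree, rhs: Tree, tpe: Option[String]) extends Tree {
  def children: List[Tree] = List(lhs, rhs)
}

object TreeInvariant {
  // True when no typed node has an untyped child, anywhere in the tree.
  def holds(tree: Tree): Boolean = {
    val okHere = tree.tpe.isEmpty || tree.children.forall(_.tpe.isDefined)
    okHere && tree.children.forall(holds)
  }
}
```

A macro that splices a freshly built, untyped `Lit(2, None)` under an already typed `Plus` produces exactly the kind of mixed tree this check rejects.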

This observation that tree transformations are difficult to support doesn’t imply that new macros won’t support them. It simply means that we need to give this problem more time and thought so that we can come up with a robust solution. So far, we have seen two promising ideas to address or circumvent this problem, one by Martin Odersky and one by Lionel Parreaux.

Principled Metaprogramming for Scala

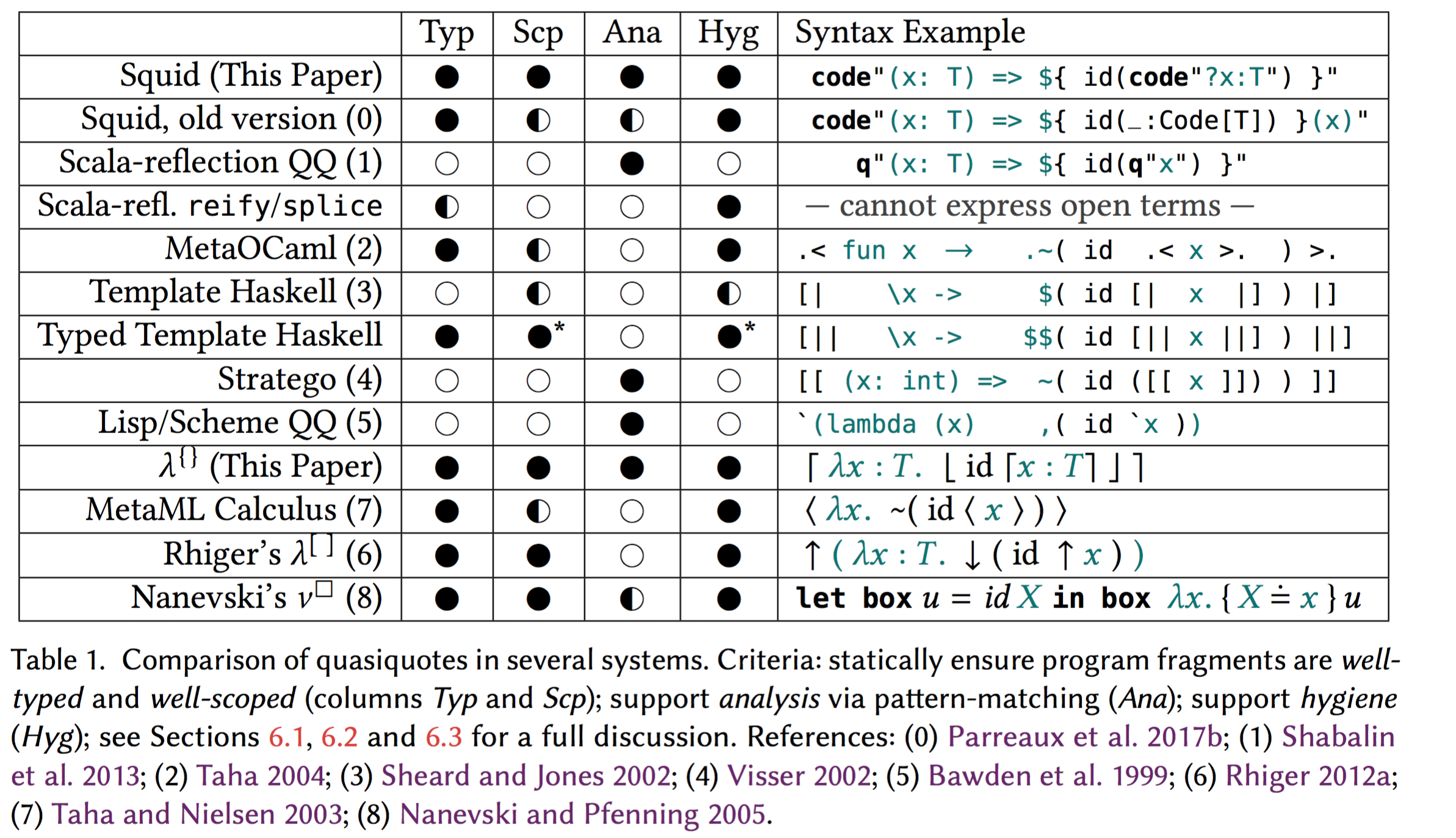

Martin Odersky recently shared a gist on Principled Metaprogramming for Scala. The design proposed in this gist is quite close to MetaOCaml as well as to a calculus by Davies and Pfenning inspired by modal logic, see “A modal analysis of staged computation”.

This proposal has triggered a rich discussion in the gist, which you may find interesting. However, the proposal may depart too far from current scala-reflect macros, which would complicate migration. Quoting Martin Odersky from r/scala:

One attractive aspect of the system is that it works equally well for staging and for macros. But as a macro system it is quite restrictive because it imposes a strong typing discipline, only works for full expressions, and in its original version does not allow for code inspection. Lionel’s Parreaux’s Squid system (paper to appear at upcoming POPL) is more powerful in that it does allow inspection.

Lionel Parreaux (@LPTK) is a PhD student at EPFL who has been working on related problems for the past couple of years as part of his research on the intersection of database systems, programming languages and large-scale data analysis.

Squid: reusable and type-safe quasiquotes

Squid is a metaprogramming framework that facilitates the type-safe manipulation of Scala programs through quasiquotes. Unlike scala-reflect quasiquotes, Squid quasiquotes are statically guaranteed to produce well-typed, well-scoped and hygienic programs. The properties of Squid quasiquotes make them ideal for robust and safe transformation of trees, the exact problem we struggled with in our prototype macro system.

Squid quasiquotes reject programs with references to unbound identifiers by statically tracking the context/scope in the type of the quasiquotes. For example, code"(x: Int) + 1" would be rejected at compile-time since x is undefined. Instead, the quasiquote must explicitly annotate free variables using question marks, as in code"(?x: Int) + 1". Free variables are then tracked in the second parameter of the quasiquote’s type, Code[Int, {val x: Int}].

To learn more about how Squid works, I highly recommend reading their upcoming POPL 2018 paper “Unifying Analytic and Statically-Typed Quasiquotes”. Here is just one interesting table from the paper that I want to include:

There are still many open questions on how to incorporate Squid into the new macros. For example,

- Squid quasiquotes eagerly convert compiler trees to Squid’s IR. This may introduce problems for syntax like patterns, and important metadata like positions or attachments may get lost.

- Squid currently does not support the ability to define methods or classes in quasiquotes.

I believe these problems are solvable, and we are discussing potential collaborations with Lionel and colleagues from his lab. If successful, the new Scala macros may have the potential to push the state-of-the-art in metaprogramming.

Conclusion

There remain many open challenges in the design of the new macros. I believe there is a lot for us to learn from related research in this field. Our next step is to continue the work in the scalacenter/macros repository, which implements the building blocks that are required to support higher-level frameworks such as Squid. These building blocks include:

- a rich compile-time reflection API to query for properties of types, symbols and trees.

- a standard syntax to declare and implement def macros, which will be similar to but distinct from the syntax to define scala-reflect def macros.

If you are interested in joining the effort, don’t hesitate to get in touch! You can reach me via email ([email protected]) or @olafurpg on Twitter.